In our February webinar (watch the recording), Forward Action’s Digital Strategy Manager Beth Miles went through everything you need to know to crack A/B testing and supercharge your digital campaigns.

First things first: what is A/B testing?

An A/B test is where you compare two or more versions of something (eg. a webpage, email or Facebook ad) to see which performs better. Users are shown the versions at random, and statistical analysis is used to determine whether one version genuinely gets more results or whether the difference could just be down to chance.

Ok, but why test?

Three reasons:

- You get more results (eg. donations, email addresses, signatures etc) for the same effort. If you don’t optimise, you are losing out on crucial conversions!

- By improving your content, you are providing a better experience for your audiences.

- You find out what actually works rather than relying on your instincts. Testing also helps you resolve internal debates about what might work better.

You’ve convinced me, but what sort of things can I test?

This is a big one, because there are so many. Opt-in copy, number of form fields, image choice, image placement, button copy, ad creative…the list goes on. This can be a bit daunting and a barrier to getting started. So it’s good to be systematic, and prioritise your testing with a step-by-step process.

A step-by-step process for A/B testing:

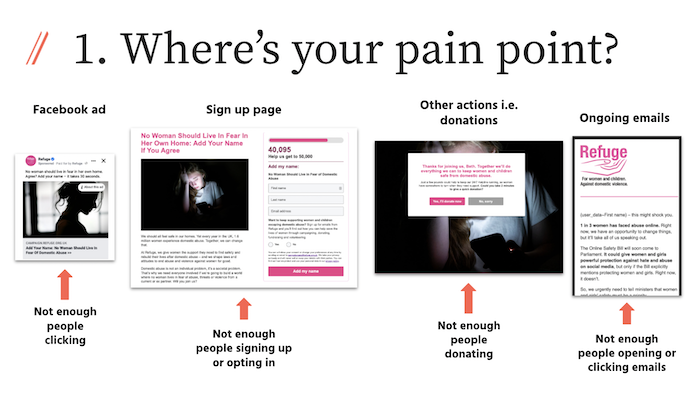

1. Identify your problem

Instead of starting with specific things you could test, start with identifying a problem you’d like to solve. One way to think about this is to find your ‘pain point’.

For example, it could be you’re getting a high click-through to your donation page but a lot of people are bouncing off the page and not completing it. Testing your donate page structure could be a good place to start.

Or, perhaps your emails have a healthy open rate, but a low conversion rate. You probably want to think about testing the copy, template or buttons in your emails.

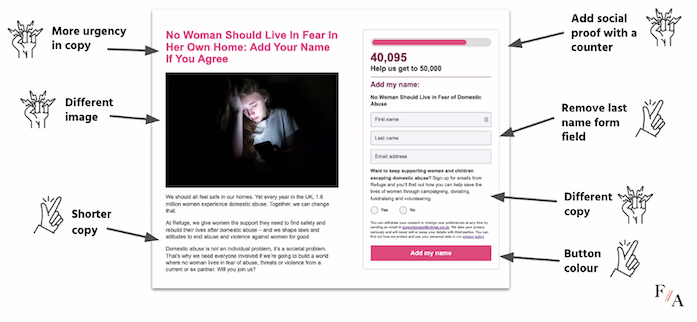

2. Decide what you could test

Once you’ve identified your problem, look critically at the content in question and list the elements related to that problem that you could test.

Broadly, there are two levers you can pull to increase actions: (i) making people want to do the action more, or (ii) making it easier for people to take the action.

Increasing people’s motivation might be achieved through messaging and framing, emotional appeals, showing the impact, social proof, urgency and more. Making actions easier could involve removing extra steps, asking the user to do one thing at a time, using more accessible or succinct copy, and building simpler, mobile-optimised pages, among other things.

You might want to take a look at other organisations for inspiration, and we’ve even got a lot of our previous test results on our blog.

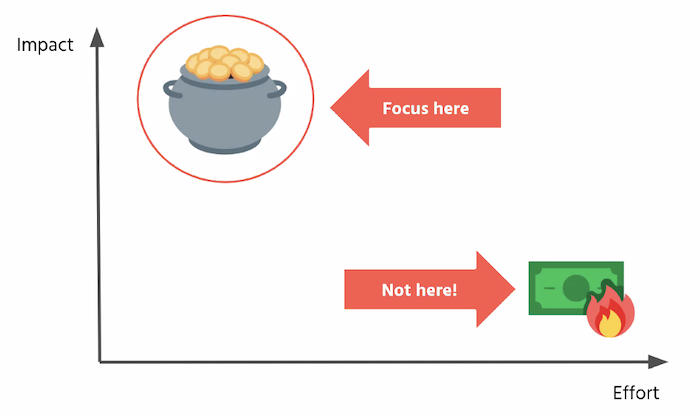

3. Prioritise your ideas

Now you’ve got a bunch of ideas, it’s time to prioritise. Aim for tests in the sweet spot: relatively low effort, but with the potential for high impact. We use an impact/effort matrix to rank potential tests.

When building your priority list, bear in mind that some tests can reasonably be expected to have a bigger impact than others. For example, your donation prompts are probably more important than button colour.
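
The webinar doesn’t spell out how the impact/effort matrix is scored, so the Python sketch below is only one hypothetical way to do it: each idea gets your own 1 to 5 estimate of impact and effort, is placed in a cell of a 2x2 matrix, and the list is ranked by impact-to-effort ratio. The `CandidateTest` class, the scoring scale, the threshold, the quadrant labels other than ‘sweet spot’, and the example scores are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    impact: int  # hypothetical 1 (low) to 5 (high) estimate of likely impact
    effort: int  # hypothetical 1 (low) to 5 (high) estimate of effort to run

    def __post_init__(self) -> None:
        if not (1 <= self.impact <= 5 and 1 <= self.effort <= 5):
            raise ValueError("impact and effort must be scored from 1 to 5")


def quadrant(idea: CandidateTest, threshold: int = 3) -> str:
    """Place an idea in one cell of a 2x2 impact/effort matrix."""
    high_impact = idea.impact >= threshold
    low_effort = idea.effort < threshold
    if high_impact and low_effort:
        return "sweet spot"
    if high_impact:
        return "big project"
    if low_effort:
        return "nice to have"
    return "deprioritise"


def prioritise(ideas: list[CandidateTest]) -> list[CandidateTest]:
    """Rank ideas by impact-to-effort ratio, breaking ties on higher impact."""
    return sorted(ideas, key=lambda i: (i.impact / i.effort, i.impact), reverse=True)


if __name__ == "__main__":
    ideas = [
        CandidateTest("Donation prompt amounts", impact=5, effort=2),
        CandidateTest("Button colour", impact=1, effort=1),
        CandidateTest("Rebuild donate page", impact=4, effort=5),
        CandidateTest("Remove optional form fields", impact=4, effort=1),
    ]
    for idea in prioritise(ideas):
        print(f"{idea.name}: {quadrant(idea)}")
```

The scores are judgement calls, so the value is less in the arithmetic than in forcing the team to agree on them before picking what to test first.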

4. Work out how to do it

So how do you practically and technically go about running a test? That depends on what you’re testing.

With webpages, you can use software like Optimizely to create different variants of an existing page; the tool then randomly shows each visitor one of the versions. Some platforms, such as Engaging Networks, also have their own in-built testing system.

For email, some platforms also have their own A/B testing feature. Either way, you can always duplicate your email, change one thing, and send the two versions to different, randomly selected segments of your list. You can send to two smaller segments first and then send the ‘winning’ email to the rest of your list, or simply split your list in half and send one version to each half. You can then apply the learnings to your future emails.
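
If your email tool doesn’t handle the split for you, the key requirement is that the segments are chosen at random. Here is a minimal Python sketch, assuming your list is available as a plain list of addresses; the `split_for_test` function, its 20% default test share and the example addresses are hypothetical choices, not a feature of any particular platform.

```python
import random


def split_for_test(recipients, test_fraction=0.2, seed=None):
    """Randomly split a mailing list into variant A, variant B and a remainder.

    test_fraction is the share of the list used for the test, divided evenly
    between A and B; the remainder can receive the winning email afterwards.
    Use test_fraction=1.0 to split the whole list in half instead.
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be in (0, 1]")
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    shuffled = list(recipients)
    # Shuffle so neither segment is skewed by how the list happens to be
    # ordered (e.g. by sign-up date)
    rng.shuffle(shuffled)
    half = int(len(shuffled) * test_fraction) // 2
    variant_a = shuffled[:half]
    variant_b = shuffled[half:2 * half]
    remainder = shuffled[2 * half:]
    return variant_a, variant_b, remainder


emails = [f"supporter{i}@example.org" for i in range(1000)]
a, b, rest = split_for_test(emails, test_fraction=0.2, seed=42)
print(len(a), len(b), len(rest))  # 100 100 800
```

You would then load each segment into your email tool and send both versions at the same time, so timing doesn’t skew the comparison.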

Facebook ads are best tested by creating separate ad sets with your variants as individual ads. Set the same budget and the same timeframe for each. (Facebook does have its own A/B test feature, but it’s best to trust your own data rather than Facebook’s.)

And don’t forget: make sure you’ve planned a way of tracking your results that you trust, whether that’s the in-built reporting in your email tool or Google Analytics tracking codes.

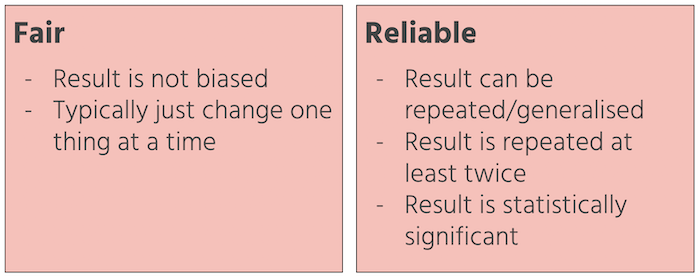

5. Ensure it’s a fair and reliable test

If you’re planning a test, you want to be sure you can actually act on any results. To do this, you need to make sure your test is fair and reliable.

A fair test means the result is not biased. To achieve this, change only one thing at a time; otherwise you won’t know which change caused any difference in results. You also want to avoid external factors affecting the result. For example, if you sent the two versions of an email at different times, the difference in timing (rather than the change you made) could explain any difference in the data.

A reliable test is one that can be repeated or generalised, and is therefore useful for you. It’s best practice to repeat a test twice before making any big changes.

An essential part of testing reliably is statistical significance. What is statistical significance, we hear you cry?

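Statistical significance tells us how likely it is that a difference as big as the one you observed would have arisen purely by chance if the versions actually performed the same. If a result is statistically significant (a common threshold is less than a 5% chance), it is unlikely that your result is due to chance alone. This means you can be reasonably confident making decisions based on it.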

If a result isn’t statistically significant, then you shouldn’t implement changes based on it. Calculating statistical significance is an absolutely essential part of A/B testing. Testing without it is pointless, and even potentially harmful as you might make changes based on faulty data.

But that’s ok, because it’s actually very easy to calculate and there’s no need to be a maths wizard. Free websites such as this one allow you to enter your results and check whether they are statistically significant. Ace!
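
For the curious, here is roughly what such a calculator does for a simple conversion-rate comparison. This is a minimal Python sketch of one standard method, a two-sided two-proportion z-test; online calculators may use other methods (such as chi-squared or Bayesian approaches), the function name is our own, and the numbers are hypothetical rather than results from the webinar.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test with a pooled standard error.

    conv_a/conv_b are conversions (e.g. sign-ups) and n_a/n_b the number of
    people who saw each version. Returns (z, p_value). This relies on the
    normal approximation, so it is unreliable with very few conversions.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in results; cannot test")
    z = (p_b - p_a) / se
    # Probability of a difference at least this large if both versions were equal
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200 sign-ups from 5,000 visitors vs 260 from 5,000
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 5%: {p < 0.05}")
```

A p-value below 0.05 is the most commonly used threshold for statistical significance.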

6. Run the test, analyse the results, and plan next steps

Now comes the fun bit! You just need to run the test and analyse the results (including checking they are statistically significant).

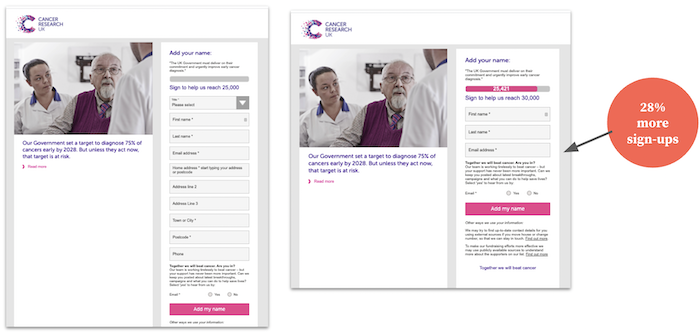

You might be surprised at how big a difference small changes can make. In the example below from Cancer Research UK, we made it easier to take the action by removing unnecessary form fields, and it increased sign-ups by 28%!

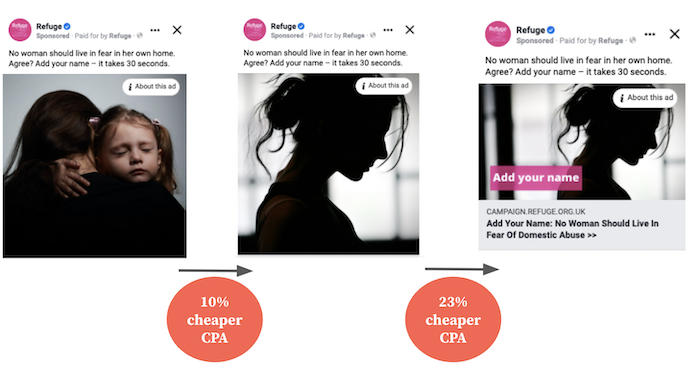

And here’s an example of iterative Facebook ad image testing with Refuge, aimed at increasing people’s motivation to take the action. The original image test led to a 10% cheaper CPA (cost per acquisition), and then refining that image reduced CPA by a further 23%.

But if you don’t get a clear result, or don’t see an improvement, that’s ok! You’re still learning things, and you can always run another test.

Once you’ve completed the test, it’s time to go back to the beginning and run another! Testing isn’t something you do once and forget about; you should always be learning. You might also want to think about how you share your insights internally, whether that’s a Slack channel, a blog or a round-up email to key people.

We hope you’ve enjoyed our webinar rundown. Want to join our next webinar? Get our emails.

Q&A

There were so many excellent questions during the webinar. Here’s a summary of the themes that came up, as well as responses to the unanswered questions.

Q: How do you know which things might have the greatest impact to test and prioritise accordingly?

Start from your key strategic goals. For example, you might have a healthy-sized list but low donation conversions, so boosting fundraising from existing supporters is more important for your organisation right now than acquisition. Then you can start to think about all the elements relating to donations that you can test.

Thinking about your testing programme in terms of specific periods of time also helps. So, you could decide that you will focus on your donation page this month, or the next three months, and make sure you always have a test in the field in that period.

Q: How do you get organisational buy-in for testing and manage expectations internally?

Creating a culture of testing, with clarity on what it entails, is really important. Being clear about your strategic objective (ie. the problem you are trying to solve) aids internal alignment on what you are trying to achieve from the get-go. Frame your testing programme with stakeholders as an iterative process of constant learning, and emphasise that the point is to get objective data from which you can then make informed decisions as an organisation. Stress that not every test will prove your hypothesis or lead to a big increase in conversions, but that by working through a logical process systematically you will learn more and more about what does (and doesn’t) work best. You might even prioritise a few tests that will return results quickly, or potential ‘low-hanging fruit’, to help establish a testing precedent. But always keep your central objective in sight.

Q: How worthwhile is email subject line testing and is it reliable with the changes to iOS and Mail Privacy Protection?

Subject line testing still has value for now, but other email tests, such as copy, structure, buttons and images, are likely to be much more impactful. So don’t stop at subject lines! Subject line tests tend to optimise the specific email you are working on, but are less likely to uncover trends and insights that lead to long-term changes.

The changes to iOS and Mail Privacy Protection will indeed gradually make open rates unreliable, so we recommend starting to identify different metrics to evaluate your email programme, such as click rate (clicks as a share of recipients) rather than click-to-open rate (clicks as a share of opens, also known as ‘conversion rate’ or ‘CTO’), which depends on open counts and so is also affected by the changes. Learn more about these changes and what your organisation should do now on our blog.
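
To see why click:recipients holds up better, here is a small Python illustration with made-up numbers (not Forward Action data). It assumes Mail Privacy Protection registers extra, automated opens for some recipients while the real clicks stay the same; the two helper functions are our own.

```python
def click_rate(clicks: int, recipients: int) -> float:
    """Clicks as a share of everyone the email was sent to (click:recipients)."""
    return clicks / recipients


def click_to_open_rate(clicks: int, opens: int) -> float:
    """Clicks as a share of recorded opens (click:opens, or 'CTO')."""
    return clicks / opens


recipients, clicks = 10_000, 300
# Hypothetical: the same email before and after automated opens inflate the count
for recorded_opens in (2_500, 4_000):
    print(
        f"recorded opens {recorded_opens}: "
        f"click rate {click_rate(clicks, recipients):.1%}, "
        f"CTO {click_to_open_rate(clicks, recorded_opens):.1%}"
    )
```

Here the click rate stays at 3.0% in both cases, while CTO drops from 12.0% to 7.5% even though supporter behaviour is identical.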

Q: How big does a sample size need to be for email testing? Can you do it with a smaller list?

Undoubtedly it’s easier to get a statistically significant result with a larger sample size. However, that doesn’t mean you can’t learn through testing with a smaller email list. If testing on a smaller sample, try to choose tests that you think are likely to make a big difference: the bigger the difference in performance between the control and the variant, the more likely it is to be statistically significant in a smaller sample. For example, it’d be hard to get a reliable result from something relatively minor, such as including an image or not, but changing the framing of your ask is more likely to produce one. Remember, though, to still test only one thing at a time! Free online sample size calculators can also help you work out the sample size you need.
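
Sample size calculators typically implement a formula like the one sketched below in Python. It is a minimal sketch using the standard normal-approximation formula for comparing two proportions; the 5% significance level and 80% power defaults are common conventions we’ve assumed, the function name is our own, and the click rates are made up. It shows why bolder tests suit smaller lists: detecting a jump from 2% to 3% needs far fewer recipients than detecting a move from 2% to 2.2%.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate number of recipients needed in EACH variant to detect a
    change from baseline_rate to expected_rate with a two-sided test.

    Uses the standard normal-approximation formula for comparing two
    proportions; alpha is the significance level, power the chance of
    detecting the difference if it is real.
    """
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    spread = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_power * sqrt(baseline_rate * (1 - baseline_rate)
                         + expected_rate * (1 - expected_rate))
    )
    return ceil(spread ** 2 / (baseline_rate - expected_rate) ** 2)


# Hypothetical 2% baseline click rate: a bold change vs a subtle one
print(sample_size_per_variant(0.02, 0.03))   # bold change: roughly 3,800 per variant
print(sample_size_per_variant(0.02, 0.022))  # subtle change: roughly 81,000 per variant
```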

Q: What’s the minimum time period or amount of traffic you need for a test?

This depends on the action you are testing. If we are testing two different sign-up pages to see which framing drives the most leads, we tend to spend c.£300 per variant on ads over 4-7 days. We would expect that to give us enough data for a clear result.

We usually run donate page tests for longer. Our approximate rule of thumb is that you will probably need around 20 donations per variant to get a statistically significant result, so you can estimate the time you need based on your current donation rates. You can help make sure you receive enough traffic by planning a series of donation emails pushing people to the relevant page.
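
As a back-of-the-envelope sketch in Python, assuming the page currently receives a steady number of donations per day and traffic is split evenly between variants (the `days_needed` helper and the example rates are hypothetical):

```python
from math import ceil


def days_needed(donations_per_day, variants=2, target_per_variant=20):
    """Rough number of days for every variant to collect target_per_variant
    donations, assuming traffic is split evenly and each variant converts at
    roughly your current rate."""
    if donations_per_day <= 0:
        raise ValueError("donations_per_day must be positive")
    return ceil(target_per_variant * variants / donations_per_day)


print(days_needed(5))  # 40 donations needed in total at 5 a day: 8 days
print(days_needed(3))  # 40 / 3 is about 13.3, so round up to 14 days
```

In practice the weaker variant will collect donations more slowly than your current average, so treat the result as a minimum.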

Q: How do you go about testing newsletters, as they have multiple content pieces?

If you want to drive engagement with your emails (and why wouldn’t you!), we strongly recommend moving away from newsletter-style emails towards emails with one central action you want people to take. We’re very confident you’ll get better results.

You can even test this yourself. Choose the main action you most want people to do in your newsletter, and test an email centred around that action against the newsletter. If you did want to test content within a newsletter, then fall back on the cardinal rule of only changing one thing per test.

Q: Do you have tried and tested things that have worked well?

There are definitely recurring trends that we’ve seen. For example, donate buttons with specific amounts trump generic ‘I’ll donate’ buttons, and creating social proof often boosts engagement. However, it’s important to remember that audiences and lists can behave differently, so it’s worth re-testing learnings on an ongoing basis. We publish many test results and our data-informed best practice on our blog and in our webinars.